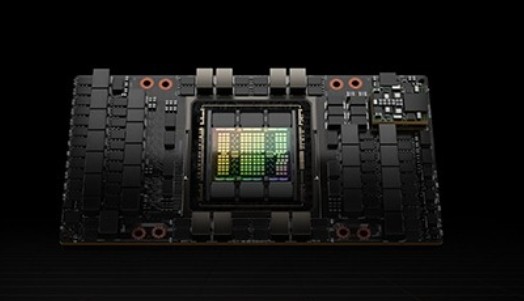

NVIDIA H100 Tensor Core GPU

| Form Factor | H100 SXM | H100 PCIe | H100 NVL¹ |
|---|---|---|---|
| FP64 | 34 teraFLOPS | 26 teraFLOPS | 68 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| FP32 | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| TF32 Tensor Core | 989 teraFLOPS² | 756 teraFLOPS² | 1,979 teraFLOPS² |
| BFLOAT16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP8 Tensor Core | 3,958 teraFLOPS² | 3,026 teraFLOPS² | 7,916 teraFLOPS² |
| INT8 Tensor Core | 3,958 TOPS² | 3,026 TOPS² | 7,916 TOPS² |
| GPU memory | 80GB | 80GB | 188GB |
| GPU memory bandwidth | 3.35TB/s | 2TB/s | 7.8TB/s³ |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG | 14 NVDEC, 14 JPEG |
| Max thermal design power (TDP) | Up to 700W (configurable) | 300-350W (configurable) | 2x 350-400W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each | Up to 14 MIGs @ 12GB each |
| Form factor | SXM | PCIe dual-slot air-cooled | 2x PCIe dual-slot air-cooled |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s |
| Server options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs | Partner and NVIDIA-Certified Systems with 2-4 pairs |
| NVIDIA AI Enterprise | Add-on | Included | Included |

1. Preliminary specifications. May be subject to change. Specifications shown for 2x H100 NVL PCIe cards paired with NVLink Bridge.

2. With sparsity.

3. Aggregate HBM bandwidth.
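Footnotes 1 and 2 mean that several table entries are not directly comparable: the Tensor Core rates include sparsity, and the H100 NVL column describes two cards. The sketch below converts them into dense and per-card figures. It assumes that the sparse rates are exactly 2x the dense rates (as with 2:4 structured sparsity) and that an NVL pair's figures split evenly between its two cards. The names `SPARSITY_FACTOR`, `dense_peak`, and `per_nvl_card` are illustrative, not part of any NVIDIA tool. Because the datasheet values are rounded, the results are approximate.

```python
# Minimal sketch: convert datasheet figures into dense and per-card numbers.
# Assumption: "with sparsity" rates (footnote 2) are 2x the dense rates,
# as with 2:4 structured sparsity.
# Assumption: H100 NVL values (footnote 1) cover a pair of cards, split evenly.

SPARSITY_FACTOR = 2      # assumed sparse-to-dense ratio
CARDS_PER_NVL_PAIR = 2   # footnote 1: two H100 NVL cards joined by an NVLink Bridge

# Tensor Core peaks "with sparsity" from the table (teraFLOPS; TOPS for INT8).
SPARSE_PEAKS = {
    "H100 SXM": {"TF32": 989, "BF16": 1979, "FP16": 1979, "FP8": 3958, "INT8": 3958},
    "H100 PCIe": {"TF32": 756, "BF16": 1513, "FP16": 1513, "FP8": 3026, "INT8": 3026},
    "H100 NVL": {"TF32": 1979, "BF16": 3958, "FP16": 3958, "FP8": 7916, "INT8": 7916},
}


def dense_peak(sparse_value: float) -> float:
    """Approximate dense peak from a sparse peak (inputs are rounded datasheet values)."""
    return sparse_value / SPARSITY_FACTOR


def per_nvl_card(pair_value: float) -> float:
    """Share of an H100 NVL pair figure attributed to a single card."""
    return pair_value / CARDS_PER_NVL_PAIR


# Dense-equivalent peaks for every product and number format.
DENSE_PEAKS = {
    product: {fmt: dense_peak(value) for fmt, value in peaks.items()}
    for product, peaks in SPARSE_PEAKS.items()
}

if __name__ == "__main__":
    for product, peaks in DENSE_PEAKS.items():
        print(product, peaks)
    # Memory capacity (GB) and aggregate bandwidth (TB/s) per H100 NVL card.
    print("H100 NVL per card:", per_nvl_card(188), "GB,", per_nvl_card(7.8), "TB/s")
```

Under these assumptions, one H100 NVL card has about 94GB of memory and 3.9TB/s of bandwidth, and the H100 SXM delivers roughly 989.5 dense FP16 Tensor Core teraFLOPS.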

